Make Big Data Work for You

The information age gives businesses of all kinds access to Big Data that's growing in volume, variety, velocity and complexity. With more data coming from more sources faster than ever, the question is: what is your Big Data strategy? How are you combining new and existing data sources to make better decisions about your business? How could new data sources such as social media, sensors, location and video help improve your business performance? Will your Big Data remain dormant, or will you make it work for you?

As your trusted partner, Digital Minds Technologies will work to help you bring order to your Big Data. With proven expertise in mature technologies and thought leadership in those that are emerging, our team of senior-level consultants will help you implement the technologies you need to manage and understand your data, allowing you to predict customer demand and make better decisions faster than ever before. Whatever your Big Data challenges are, we'll provide the strategic guidance you need to succeed.

We are tried-and-tested Big Data industry veterans with years of direct use-case experience. We tailor our services to exactly what your business requires from Big Data.

NoSQL Databases:

- MongoDB

- Cassandra

- Solr

Hadoop Ecosystem Technologies:

- Cloudera

- Hortonworks

- HBase

- Hive

Our services include:

- Expert Implementations

- Hadoop solutions designed by Hadoop-certified consultants

- Big Data Analytics

- Business-level Big Data strategy consulting

Big Data Architecture Capabilities

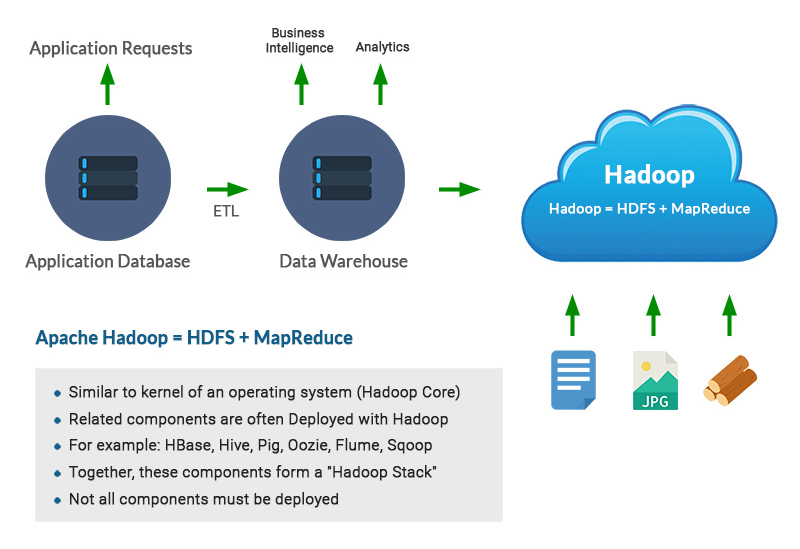

Apache Hadoop is an open-source software framework for the storage and large-scale processing of data sets on clusters of commodity hardware. Hadoop is an Apache top-level project built and used by a global community of contributors and users.

Hadoop Distributed File System (HDFS):

- An Apache open source distributed file system

- Designed to run on low-cost commodity hardware

- Known for highly scalable storage and automatic data replication across three nodes for fault tolerance

- Replication guards against node and disk failures, although it does not replace backups that protect against accidental deletion or corruption

- Write once, read many times
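As a rough illustration of what three-way replication means for cluster sizing, the sketch below estimates usable capacity and the number of block replicas stored for a file. The replication factor of 3 comes from the list above. The 128 MB block size is the default in recent Hadoop releases and is treated as an assumption here, and the function names are hypothetical helpers, not part of any Hadoop API.

```python
import math

BLOCK_SIZE_MB = 128  # assumption: default HDFS block size in recent Hadoop releases


def usable_capacity_tb(raw_tb: float, replication: int = 3) -> float:
    """Capacity left for unique data when every block is stored `replication` times."""
    return raw_tb / replication


def block_replicas(file_size_mb: float, replication: int = 3) -> int:
    """Total block replicas stored for one file (hypothetical helper)."""
    blocks = math.ceil(file_size_mb / BLOCK_SIZE_MB)
    return blocks * replication


if __name__ == "__main__":
    print(usable_capacity_tb(300))  # 100.0
    print(block_replicas(1024))     # 8 blocks x 3 replicas = 24
```

In other words, under these assumptions a cluster with 300 TB of raw disk holds roughly 100 TB of unique data.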

Cloudera Manager:

- Cloudera Manager is an end-to-end management application for Cloudera’s Distribution of Apache Hadoop

- Cloudera Manager gives a cluster-wide, real-time view of nodes and services running; provides a single, central place to enact configuration changes across the cluster; and incorporates a full range of reporting and diagnostic tools to help optimize cluster performance and utilization

Apache HBase:

- Allows random, real-time read/write access

- Strictly consistent reads and writes

- Automatic and configurable sharding of tables

- Automatic failover support between Region Servers
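To make the idea of table sharding more concrete, here is a toy model of how a sorted row-key space can be divided into contiguous ranges, with each range served as one shard (HBase calls these regions). The split keys and region names are invented for illustration, and nothing here uses the real HBase client API.

```python
from __future__ import annotations

from bisect import bisect_right

# Hypothetical split points: each region holds row keys in [start, next_start).
SPLIT_KEYS = ["g", "n", "t"]
REGIONS = ["region-0", "region-1", "region-2", "region-3"]


def region_for(row_key: str) -> str:
    """Return the region whose key range contains row_key."""
    return REGIONS[bisect_right(SPLIT_KEYS, row_key)]


def add_split(split_keys: list[str], new_split: str) -> list[str]:
    """Model a region split by adding one more boundary, kept in sorted order."""
    return sorted(set(split_keys) | {new_split})


if __name__ == "__main__":
    print(region_for("apple"))   # region-0
    print(region_for("monkey"))  # region-1
    print(add_split(SPLIT_KEYS, "k"))  # ['g', 'k', 'n', 't']
```

Because rows are kept sorted by key, locating the right shard is a binary search over the split points, and splitting a busy shard only adds one boundary.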

Apache Cassandra:

- Data model offers column indexes with the performance of log-structured updates, materialized views, and built-in caching

- Fault tolerance capability is designed for every node, replicating across multiple datacenters

- Can choose between synchronous or asynchronous replication for each update
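One common way to reason about the per-update replication choice above is to count how many replicas must acknowledge a write before it returns, and how many must answer a read. The sketch below shows the usual quorum arithmetic. It assumes that "synchronous" means waiting for those acknowledgements, and the function names are hypothetical rather than taken from any database driver.

```python
def quorum(replication_factor: int) -> int:
    """Smallest majority of replicas."""
    return replication_factor // 2 + 1


def reads_see_latest_write(write_acks: int, read_acks: int, replication_factor: int) -> bool:
    """A read overlaps the latest write when write_acks + read_acks > replication_factor."""
    return write_acks + read_acks > replication_factor


if __name__ == "__main__":
    rf = 3
    q = quorum(rf)
    print(q)                                  # 2
    print(reads_see_latest_write(q, q, rf))   # True
    print(reads_see_latest_write(1, 1, rf))   # False
```

Waiting for more acknowledgements gives stronger guarantees at the cost of latency, while waiting for fewer favors speed and lets the remaining replicas catch up asynchronously.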

Apache Hive:

- Tools to enable easy data extract/transform/load (ETL) from files stored either directly in Apache HDFS or in other data storage systems such as Apache HBase

- Uses a simple SQL-like query language called HiveQL

- Query execution via MapReduce
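To give a feel for how a SQL-like query can run as a MapReduce job, the sketch below imitates the map, shuffle and reduce steps for a simple aggregation in plain Python. The table, column names and functions are made up for illustration. This is a conceptual model, not Hive's actual execution plan.

```python
from collections import defaultdict

# Hypothetical rows standing in for a table stored in HDFS.
ROWS = [
    {"region": "east", "amount": 10},
    {"region": "west", "amount": 5},
    {"region": "east", "amount": 7},
]


def map_phase(rows):
    """Emit (key, value) pairs, as a mapper would for GROUP BY region."""
    for row in rows:
        yield row["region"], row["amount"]


def reduce_phase(pairs):
    """Group values by key (the shuffle), then sum each group (the reduce)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return {key: sum(values) for key, values in groups.items()}


if __name__ == "__main__":
    # Roughly: SELECT region, SUM(amount) FROM sales GROUP BY region;
    print(reduce_phase(map_phase(ROWS)))  # {'east': 17, 'west': 5}
```

On a real cluster the map and reduce steps run in parallel across many nodes, which is what lets the same query scale to very large tables.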

Hadoop

HDFS (Hadoop Distributed File System) is a distributed fault-tolerant file system designed to be deployed on low cost commodity hardware. HDFS provides high throughput access to large amounts of application data.

HDFS does not require expensive, fast disk drives with RAID (Redundant Array of Independent Disks) to provide high throughput and fault tolerance. Instead, it achieves both by distributing and replicating data across the nodes of the cluster.

Digital Minds Technologies leverages its deep business domain and technology expertise to help enterprises identify business use cases and evolve their information management and big data strategy. Our experts define the architecture, then evaluate and recommend the enabling technologies required to support the business goals. We then translate the strategy into manageable, phased plans that deliver incremental business capabilities and results.

With our industry-leading experience in conventional BI systems and emerging big data technologies, we define the architecture and blueprints that align the architecture components and deliver the foundation for enterprise information needs. Our experts transform the strategy into a blueprint that covers how to optimally acquire, process and store data for large-scale experimentation, and how to visualize it for business consumption. Our architecture encompasses both emerging big data technologies and existing BI systems, so that each strengthens the other and elevates overall capabilities.

We have a long history of not just defining the right strategy and solution, but also executing on it. Our implementation services include designing, building, testing and implementing all the components of the solution.

Our business domain expertise enables us not just to define the key insights but also to help enterprises adopt and leverage them to improve their business, by making the information accessible, visually usable and easily embeddable in the business decision data flow.